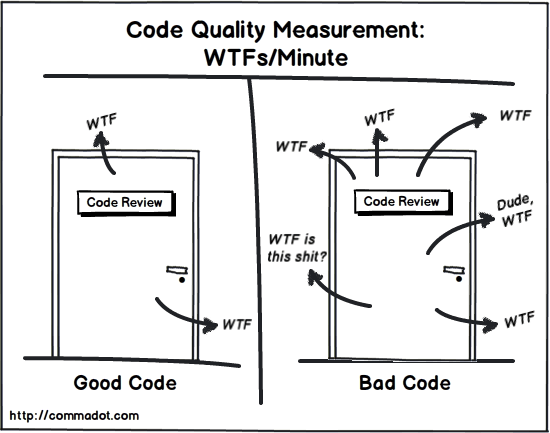

Importance of Maintaining The Quality of Code

- Good quality code is an essential property of a software because it could lead to financial losses or waste of time needed for further maintenance, modification or adjustments if code quality is not good enough.

Readable

- The ability of allowing the code to be easily, quickly, and clearly understandable by someone new or someone that hasn't seen it in a while.

- Ensures that everyone can understand the code written by everyone else.

- If the code is messy and badly written, it would be very hard to understand what the code does and where changes need to be made.

- This could waste much time trying to figure out how it all fits together before making any action and even end up re-writing the again assuming that it is buggy and carelessly written.

Efficiency

- Directly related to the performance and speed of running the software.

- The quality of the software can be evaluated with the efficiency of the code used.

- No one likes to use a software that takes too long to perform an action.

Reliability

- Ability to perform consistent and failure-free operations every time it runs.

- The software would be very less useful if the code function differently every time it runs even with the same input in same environment and if it breaks down often without throwing any errors.

Robustness

- Ability to cope with errors during program execution even under unusual condition.

- Image how would you feel when you use a software that keep showing strange and unfamiliar message when you did something wrong.

- Software is typically buggy and fragile but it should handle any errors encountered gracefully.

Portability

- Ability of the code to be run on as many different machines and operating systems as possible.

- It would be a waste of time and energy for programmers to re-write the same code again when it transferred from one environment to another.

Maintainability

- Code that is easy to add new features, modify existing features or fix bugs with a minimum of effort without the risk of affecting other related modules .

- Software always needs new features or bug fixes. So the written code must be easy to understand, easy to find what needs to be change, easy to make changes and easy to check that the changes have not introduced any bugs.

Different Approaches Used to Measure The Quality of Code

Reliability

- Reliability measures the probability that a system will run without failure over a specific period of operation.

- It relates to the number of defects and availability of the software.

- Number of defects can be measured by running a static analysis tool.

- Software availability can be measured using the mean time between failures(MTBF).

- Low defect counts are especially important for developing reliable codebase.

Testability

- Testability measures how well the software supports testing efforts. It relies on how well you can control, observe, isolate, and automate testing, among other factors.

- Testability can be measured based on how many test cases you need to find potential faults in the system.

- Size and complexity of the software can impact testability.

- So, applying methods at the code level such as cyclomatic complexity can help you improve the testability of the component.

Maintainability

- Maintainability measures how easily software can be maintained.

- It relates to the size, consistency, structure, and complexity of the codebase.

- And ensuring maintainable source code relies on a number of factors, such as testability and understandability.

- You can’t use a single metric to ensure maintainability.

- Some metrics you may consider to improve maintainability are number of stylistic warnings and Halstead complexity measures.

- Both automation and human reviewers are essential for developing maintainable codebases.

Portability

- Portability measures how usable the same software is in different environments.

- It relates to platform dependency.

- There isn’t a specific measure of portability. But there are several ways you can ensure portable code.

- It’s important to regularly test code on different platforms, rather than waiting until the end of development.

- It’s also a good idea to set your compiler warning levels as high as possible and use at least two compilers.

- Enforcing a coding standard also helps with portability.

Reusablitiy

- Reusability measures whether existing assets such as code can be used again.

- Assets are more easily reused if they have characteristics such as modularity or loose coupling.

- Reusability can be measured by the number of interdependencies.

- Running a static analyzer can help you identify these interdependencies.

Available Tools to Maintain Code Quality

Collaborator

- Collaborator is the most comprehensive peer code review tool, built for teams working on projects where code quality is critical.

- See code changes, identify defects, and make comments on specific lines. Set review rules and automatic notifications to ensure that reviews are completed on time.

- Custom review templates are unique to Collaborator. Set custom fields, checklists, and participant groups to tailor peer reviews to your team’s ideal workflow.

- Easily integrate with 11 different SCMs, as well as IDEs like Eclipse & Visual Studio.

- Build custom review reports to drive process improvement and make auditing easy.

- Conduct peer document reviews in the same tool so that teams can easily align on requirements, design changes, and compliance burdens.

Review Assistant

- Review Assistant is a code review tool.

- This code review plug-in helps you to create review requests and respond to them without leaving Visual Studio.

- Review Assistant supports TFS, Subversion, Git, Mercurial, and Perforce.

- Simple setup: up and running in 5 minutes.

Key Features

- Flexible code reviews

- Discussions in code

- Iterative review with defect fixing

- Team Foundation Server integration

- Flexible email notifications

- Rich integration features

- Reporting and Statistics

- Drop-in Replacement for Visual Studio Code Review Feature and much more

Codebrag

- Codebrag is a simple, light-weight, free and open source code review tool which makes the review entertaining and structured.

- Codebrag is used to solve issues like non-blocking code review, inline comments & likes, smart email notifications etc.

- With Codebrag one can focus on workflow to find out and eliminate issues along with joint learning and teamwork.

- Codebrag helps in delivering enhanced software using its agile code review.

- License for Codebrag open source is maintained by AGPL.

Gerrit

- Gerrit is a free web-based code review tool used by the software developers to review their code on a web-browser and reject or approve the changes.

- Gerrit provides the repository management for Git.

- Gerrit can be integrated with Git which is a distributed Version Control System.

- Gerrit is also used in discussing a few detailed segments of the code and enhancing the right changes to be made.

- Using Gerrit, project members can use rationalized code review process and also the extremely configurable hierarchy.

Codestriker

- Codestriker is an open source and free online code reviewing web application that assists the collaborative code review.

- Using Codestriker one can record the issues, comments, and decisions in a database which can be further used for code inspections.

- Codestriker supports traditional documents review. It can be integrated with ClearCase, Bugzilla, CVS etc.

- Codestriker is licensed under GPL.

Veracode

- Veracode (now acquired by CA Technologies) is a company which delivers various solutions for automated & on-demand application security testing, automated code review etc.

- Veracode is used by the developers in creating secured software by scanning the binary code or byte code in place of source code.

- Using Veracode one can identify the improper encrypted functionalities, malicious code and backdoors from a source code.

- Veracode can review a large amount of code and returns the results immediately.

- To use Veracode there is no need to buy any software or hardware, you just need to pay for the analysis services you need.

Dependency/Package Management Tools

- Most digital services will rely on some third-party code from other software to work properly.

- This is called a dependency.

- A dependency is something you rely upon to achieve a goal but that is not under your control.

- Project dependencies come in many shapes and sizes.

- They include hardware, software, resources, and people. Your software project will rely on a large number of dependencies, regardless of the size of the your technology stack, or the available human and financial resources.

- It is tempting to look at each dependency as a single independent unit.

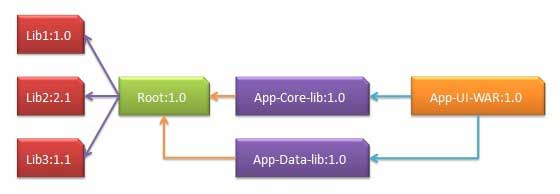

- However, this type of isolated dependency is rare. In general, each dependency is part of a complex web of interconnected relationships that is hard to untangle.

- You’ll need to manage any dependencies in your service carefully to keep your code up to date , system secure and service working as intended.

- A common approach, especially in the open source community, is to use a dependency management tool.

- This pulls in third-party dependencies automatically at runtime, deploy time or compile time.

- Even if you’re using a dependency management tool, you shouldn’t just trust a dependency without testing it first.

- This includes how secure it is. For example, if a library used to generate a web form introduces an SQL injection vulnerability , then your acceptance tests should fail.

- You will need to trust the specific code and version you are using , not just the general library or framework it belongs to.

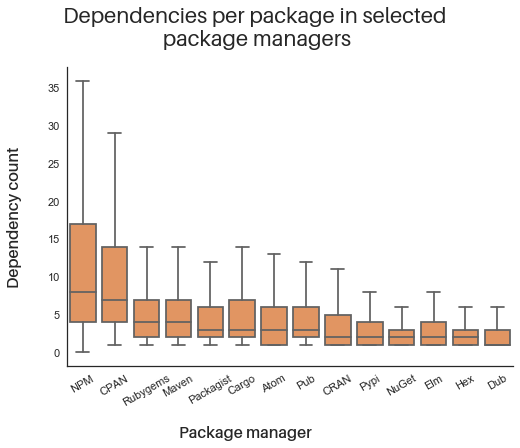

- As demonstrated in the graphic above, the typical number of dependencies for a representative open source package varies widely between ecosystems.

- In the modern era of software development, developers incorporate many distinct open source packages into their applications to help speed up development time and improve software quality.

- The actual counts can vary across developers and ecosystems—for example, a Python developer might be less likely to pull in dozens of additional packages than a JavaScript developer would, for example.

- But regardless of the programming language, each new package pulls in a network of additional (so called transitive) dependencies of its own.

- As we illustrate above, the average package in most ecosystems pulls in an additional five dependencies, adding to the overall software complexity.

- Software today is like an iceberg: you may actively pull in just a few dependencies yourself, but those known dependencies are only a small percentage of your actual dependency tree.

- The additional dependencies brought in by packages that your application relies on are equally important to the security, licensing, and future performance of your software.

- The open source that we all rely on extends far beyond the first layer of packages in our applications.

- We should seek to understand and ensure the health of every package we use, whether hidden in our transitive dependencies or not.

- Let’s look at three common tools of dependency management.

NuGet

- NuGet is the package manager for the Microsoft development platform including .NET.

- The NuGet client tools provide the ability to produce and consume packages.

- The NuGet Gallery is the central package repository used by all package authors and consumers.

- When you use NuGet to install a package, it copies the library files to your solution and automatically updates your project (add references, change config files, etc.).

- If you remove a package, NuGet reverses whatever changes it made so that no clutter is left.

Composer

- This dependency manager for PHP lets you create a composer.json file in your project root , run a single command , and all your dependencies are down;oaded ready to use.

- Composer is not a package manager in the same sense as Yum or Apt are.

- Yes, it deals with “packages” or libraries, but it manages them on a per-project basis, installing them in a directory (e.g. vendor) inside your project.

- By default it does not install anything globally. Thus, it is a dependency manager.

- It does however support a “global” project for convenience via the global command.

Nanny

- Nanny is a dependency management tool for managing dependencies between your projects.

- Unlike tools like Maven, Nanny can be used for arbitrary dependencies and is easy to use.

- Nanny lets you specify dependencies to your project, and Nanny will go ahead and pull in all the dependencies (and everything those dependencies are dependent on) into the _deps folder in your project.

- Nanny makes it easy to create dependencies and manage dependency versions.

Bower

- Bower is a package manager for the web.

- Bower lets you easily install assets such as images, CSS and JavaScript, and manages dependencies for you.

- Bower can manage components that contain HTML, CSS, JavaScript, fonts or even image files.

- Bower doesn’t concatenate or minify code or do anything else.

- It just installs the right versions of the packages you need and their dependencies.

Pintjs

- Pint is a small, asynchronous, dependency aware wrapper around Grunt attempting to solve some of the problems that accompany a build process at scale.

- A typical Gruntfile starts with, at a minimum, some variation of: jsHint, jasmine, LESS, handlebars, uglify, copy, and clean stack.

- Just these half dozen or so plugins can balloon your Gruntfile upwards of 300 lines and when you add complex concatenation, cache busting, and versioning can cause it to grow well in to the 1000+ lines.

- Pint allows you to break up and organize your build into small testable pieces.

Jam

- Jam is a package manager for JavaScript. Unlike other repositories, they put the browser first.

- Using a stack of script tags isn’t the most maintainable way of managing dependencies; with Jam packages and loaders like RequireJS you get automatic dependency resolution.

- You can achieve faster load times with asynchronous loading and the ability to optimize downloads.

- JavaScript modules and packages provide properly namespaced and more modular code.

Volo

- Volo is a tool for creating browser based, front end projects from project templates and add dependencies by fetching them from GitHub.

- Once your project is set up, automate common tasks.

- Volo is dependency manager and project creation tool that favors GitHub for the package repository.

- At its heart, volo is a generic command runner — you can create new commands for volo, and you can use commands others have created.

NPM

- npm is the package manager tool for JavaScript.

- Find , share and reuse packages of code from hundreds of thousands of developers and assemble them in powerful new ways.

- Dependencies can be updated and optimized right from the terminal.

- And you can build new projects with dependency files and version numbers automatically pulled from the package.json file.

What are the difference between Maven and Ivy?

| Maven | Ivy |

|---|---|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

Build Tools

- Build tools are programs that automate the creation of executable applications from source code.

- Building incorporates compiling, linking and packaging the code into a usable or executable form.

- In small projects, developers will often manually invoke the build process.

- This is not practical for larger projects, where it is very hard to keep track of what needs to be built, in what sequence and what dependencies there are in the building process.

- Using an automation tool allows the build process to be more consistent.

- The primary purpose of the first build tools, such as the GNU make and "makedepend" utilities, commonly found in Unix and Linux-based operating systems, was to automate the calls to the compilers and linkers.

- Today, as build processes become ever more complex, build automation tools usually support the management of the pre- and post-compile and link activities, as well as the compile and link activities.

- Modern build tools go further in enabling work flow processing by obtaining source code, deploying executables to be tests and even optimizing complex build processes using distributed build technologies, which involves running the build process in a coherent, synchronized manner across several machines.

- Here are a few things to look for when selecting a build tool.

Speed

- Ideally, you want your build tool to be fast in execution as there’s much need for speed when iterating on a website or app.

- Also, when changing a line of code, you want to reload the page to see the changes instantly.

- Disrupting that process could slow down productivity.

Community Driven

- The tool you select should have a healthy community of developers that exchange plugins and are continually adding functionality to support it.

Modular and Flexible

- Even the most advanced tool has its limits.

- Tools that are extensible allow you to add your own custom functionality giving you the flexiblity to adjust as you see fit.

Significance of using a build tool in large scale software development

Organization and Maintenance of Software Components

- This is one of the big tasks of large-scale software development.

- The system must be arranged into a set of small, manageable components that interact with each other.

- The interaction should take place through well-defined, organized interfaces, simplifying the task of managing and maintaining the components.

- Typically, time constraints and insufficient experience combine to introduce defects in the way software systems are architected, leading to decreased quality and larger overheads in managing and maintaining the system.

- As an answer to this problem, a number of studies have measured software systems for complexity, which has led to a standardized set of software quality metrics.

- While there's no substitute for experienced project managers, the metrics do offer insight into assessing software complexity and quality.

- It's also important to be able to detect inconsistencies in the program as it changes.

- Typically, a program may be changed in one place, but the effect of these changes in other places is overlooked.

- For example, by changing a type it's possible to make an existing type cast located in a different module no longer necessary, and also overlook this type cast.

Time Constraints

- A problem faced by large software systems development (in any language) is waiting for the system to be rebuilt after every small change.

- The time required to rebuild after each change increases with the size of the system, adding up to expensive overhead costs.

- After a certain point, the necessary, endless rebuilds significantly reduce productivity.

- Organizing a system into well-architected components and reusable libraries goes a long way toward solving this problem.

- Yet developer tools still need to be smart about how much rebuilding they have to do for each small change.

- The best solution to this problem is incremental development environments such as VisualAge.

- They recompile only the minimum amount necessary when a system is changed.

- This concept of incrementality can also be extended to other activities beyond the standard development steps to include QA, testing and so forth.

Memory Management

- Large systems tend to use a lot of memory, and unless it's managed carefully the capacity of the underlying hardware can quickly be exhausted.

- In systems written in C and C++, the developer has complete responsibility for making sure that unused memory is recycled for future use rather than retained indefinitely.

- Java addresses this problem with automatic garbage collection, i.e., the Java Virtual Machine periodically searches for memory that is no longer in use and recycles it for future use.

- Unfortunately, garbage collection can take a significant amount of time when systems use a lot of memory, severely contributing to performance degradation.

- Most Java programmers today assume that they have to live with this in large Java programs.

- The solution is to actively manage memory, and simply let the garbage collector kick in for the smaller chunks of memory as well as what slips through the cracks of the explicit memory management routines.

- Hopefully, better garbage collection algorithms will become available shortly in Java Virtual Machines and the problems related to garbage collection will soon be a memory; the next six months will reveal this possibility.

Safety

- When building large systems a lot of assumptions are made regarding how the system works.

- If the system is correct, these assumptions are met by the system execution.

- However, since bugs in software are always expected, these assumptions may not always hold.

- The debugging process essentially means running the system in a controlled manner to determine if these assumptions are met, and looking for ways to make corrections when they aren't.

- In mission-critical systems some assumptions are important and require enforcement.

- Similarly, in multithreaded systems where it's often impossible to reproduce a problem, the violation of any assumption must be reported.

- Providing diagnostic APIs solves this problem, allowing assumptions to be built into the program as constraints that must hold during the program's execution.

- Tools to help manage them are needed to encourage users to write such constraints.

- One important capability necessary to encourage using diagnostic constructs is an easy way to strip out these constructs when it's time to package the system for final shipping.

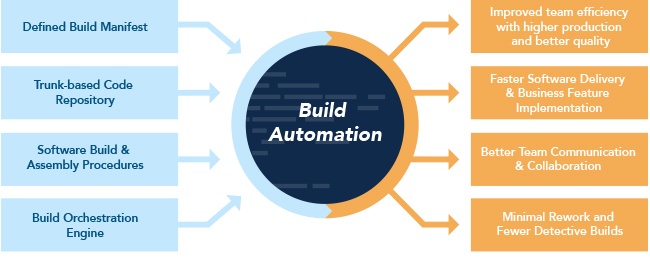

Build Automation

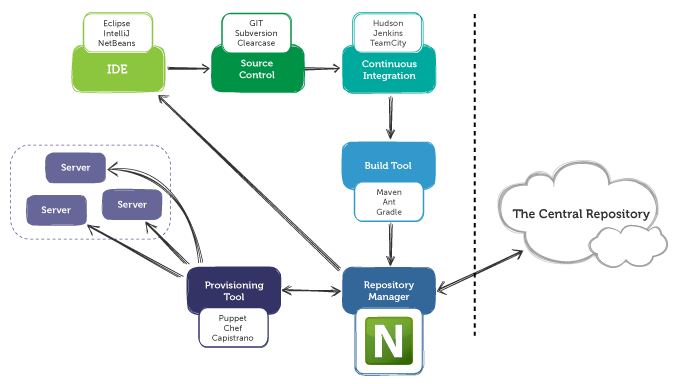

- Build Automation is the process of scripting and automating the retrieval of software code from a repository, compiling it into a binary artifact, executing automated functional tests, and publishing it into a shared and centralized repository.

- Also build automation is the process of automating the creation of a software build and the associated processes including compiling computer source code into binary code and running automated tests.

- Build-automation utilities allow the automation of simple, repeatable tasks.

- When using the tool, it will calculate how to reach the goal by executing tasks in the correct, specific order and running each task.

- The two ways build tools differ are task-oriented vs. product-oriented. Task-oriented tools describe the dependency of networks in terms of a specific set task and product-oriented tools describe things in terms of the products they generate.

- Automation is achieved through the use of a compile farm for either distributed compilation or the execution of the utility step.

- The distributed build process must have machine intelligence to understand the source-code dependencies to execute the distributed build.

Advantages

- A necessary pre-condition for continuous integration

- Improve product quality

- Accelerate the compile and link processing

- Eliminate redundant tasks

- Minimize "bad builds"

- Eliminate dependencies on key personnel

- Have history of builds and releases in order to investigate issues

- Save time and money - because of the reasons listed above

Build Tools

LambdaTest

- LambdaTest is a scalable cloud-based cross browser testing platform designed to offer all software testing need to cloud infrastructure.

- According to the vendor, LambdaTest platform helps ensure web app elements (such as JavaScript, CSS, HTLM5, Video...etc.) render seamlessly across every desktop...

Service Control

- ServiceControl is an identity management solution that is designed to provide a simpler way to create, manage, and audit accounts across multiple systems.

- This software is targeted at solution architects, IDM and IAM project managers, line-of-business application owners, and busy IT administrator.

Apache Ant With Ivy

- Ant is java library, which helps to drive the process defined in the build file.

- Mainly Ant is used to build java applications.

- Ant is very flexible , it does not impose any rules like coding conventions , directory structure.

- Ivy is a sub project of Ant , which acts as a dependency manager.

Gradle

- Gradle is built upon the concepts of ant and maven.

- Gradle uses groovy scripts for declaring project configuration.

- Gradle was designed for multi-project builds and supports incremental builds by determining which parts of the build are up-to-date.

- Ant is mostly treated as legacy right now. Industry going forward with Gradle build tool.

- I personally feel, Ant and Maven still we can use, it mainly depends on the project.

- Sometimes we can use a combination of Ant and Gradle, Maven and Gradle, or even three together.

Apache Maven

- Maven is more than a build tool.

- Maven even describes how software is built and helps in dependency management also.

- Maven is used mainly for java based projects.

Maven Build Life Cycle

- Maven is based around the central concept of a build lifecycle.

- What this means is that the process for building and distributing a particular artifact (project) is clearly defined.

- For the person building a project, this means that it is only necessary to learn a small set of commands to build any Maven project, and the POM will ensure they get the results they desired.

- Each of these build lifecycles is defined by a different list of build phases, wherein a build phase represents a stage in the lifecycle.

- For example, the default lifecycle comprises of the following phases.

Validate

- Validate the project is correct and all necessary information is available.

Compile

- Compile the source code of the project.

Test

- Test the compiled source code using a suitable unit testing framework.

- These tests should not require the code be packaged or deployed.

Package

- Take the compiled code and package it in its distributable format, such as a JAR.

Verify

- Run any checks on results of integration tests to ensure quality criteria are met.

Install

- Install the package into the local repository, for use as a dependency in other projects locally.

Deploy

- Done in the build environment, copies the final package to the remote repository for sharing with other developers and projects.

Gradle Build Life Cycle

- We said earlier that the core of Gradle is a language for dependency based programming.

- In Gradle terms this means that you can define tasks and dependencies between tasks.

- Gradle guarantees that these tasks are executed in the order of their dependencies, and that each task is executed only once.

- There are build tools that build up such a dependency graph as they execute their tasks.

- Gradle builds the complete dependency graph before any task is executed.

- This lies at the heart of Gradle and makes many things possible which would not be possible otherwise.

- A Gradle build has three distinct phases.

Initialization

- Gradle supports single and multi-project builds.

- During the initialization phase, Gradle determines which projects are going to take part in the build, and creates a project instance for each of these projects.

Configuration

- During this phase the project objects are configured.

- The build scripts of all projects which are part of the build are executed.

Execution

- Gradle determines the subset of the tasks, created and configured during the configuration phase, to be executed.

- The subset is determined by the task name arguments passed to the gradle command and the current directory.

- Gradle then executes each of the selected tasks.

Maven

- Maven is a build automation tool used primarily for Java projects.

- Maven addresses two aspects of building software: first, it describes how software is built, and second, it describes its dependencies.

- Maven is a project management and comprehension tool that provides developers a complete build lifecycle framework.

- Development team can automate the project's build infrastructure in almost no time as Maven uses a standard directory layout and a default build lifecycle.

- In case of multiple development teams environment, Maven can set-up the way to work as per standards in a very short time.

- As most of the project setups are simple and reusable, Maven makes life of developer easy while creating reports, checks, build and testing automation setups.

- To summarize, Maven simplifies and standardizes the project build process.

- It handles compilation, distribution, documentation, team collaboration and other tasks seamlessly.

- Maven increases reusability and takes care of most of the build related tasks.

- The primary goal of Maven is to provide developer with a comprehensive model for projects, which is reusable, maintainable, and easier to comprehend and plugins or tools that interact with this declarative model.

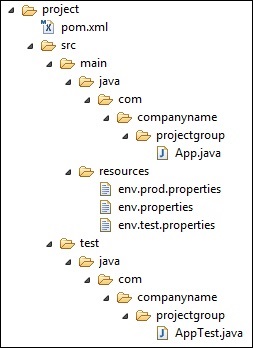

- Maven project structure and contents are declared in an xml file, pom.xml, referred as Project Object Model (POM), which is the fundamental unit of the entire Maven system.

Features of Maven

Model based builds

- Maven is able to build any number of projects into predefined output types such as jar, war, metadata.

Coherent site of project information

- Using the same metadata as per the build process, maven is able to generate a website and a PDF including complete documentation.

Release management and distribution publication

- Without additional configuration, maven will integrate with your source control system such as CVS and manages the release of a project.

Backward compatibility

- You can easily port the multiple modules of a project into Maven 3 from older versions of Maven. It can support the older versions also.

Parallel Builds

- It analyzes the project dependency graph and enables you to build schedule modules in parallel.

- Using this, you can achieve the performance improvements of 20-50%.

Better error and integrity reporting

- Maven improved error reporting, and it provides you with a link to the Maven wiki page where you will get full description of the error.

How Maven Uses Conventions Over Configurations

- Convention over configuration is one of the main design philosophies behind apache maven.

- Maven is directed by a configuration file called pom.xml.

- This may also be distributed in a project hierarchy where a "parent" pom file calls subsequent pom.xml files lower in the build hierarchy.

- Maven also has default targets which perform tasks defined by convention.

- All operations can be modified and expanded with more detail.

- This is also in contrast to Ant which requires one to define all targets and behavior.

- Let's go through a few examples.

- A complete Maven project can be created using the following configuration file

<project>

<modelVersion>4.0.0</modelVersion>

<groupId>com.packt</groupId>

<artifactId>sample-one</artifactId>

<version>1.0.0</version>

</project>

- If someone needs to override the default , conventional behavior of Maven , then it is possible too.

- The following example pom.xml file shows how to override some of the preceding default values.

<project>

<modelVersion>4.0.0</modelVersion>

<groupId>com.packt</groupId>

<artifactId>sample-one</artifactId>

<version>1.0.0</version>

<packaging>jar</packaging>

<build>

<sourceDirectory>${basedir}/src/main/java</sourceDirectory>

<testSourceDirectory>${basedir}/src/test/java

</testSourceDirectory>

<outputDirectory>${basedir}/target/classes

</outputDirectory>

</build>

</project>

Build Profile In Maven

- A Build profile is a set of configuration values, which can be used to set or override default values of Maven build.

- Using a build profile, you can customize build for different environments such as Production v/s Development environments.

- Profiles are specified in pom.xml file using its activeProfiles/profiles elements and are triggered in variety of ways.

- Profiles modify the POM at build time, and are used to give parameters different target environments (for example, the path of the database server in the development, testing, and production environments).

- Build profiles are majorly of three types.

Per project

- Defined in the project POM file, pom.xml

Per user

- Defined in Maven settings xml file (%USER_HOME%/.m2/settings.xml)

Global

- Defined in Maven global settings xml file (%M2_HOME%/conf/settings.xml)

- Now, under src/main/resources , there are three environment specific files.

env.properties

- Default configuration used if no profile is mentioned.

env.test.properties

- Test configuration when test profile is used.

env.prod.properties

- production configuration when prod profile is used.

- Profiles are specified using a subset of the elements available in the POM itself (plus one extra section), and are triggered in any of a variety of ways. They modify the POM at build time, and are meant to be used in complementary sets to give equivalent-but-different parameters for a set of target environments (providing, for example, the path of the appserver root in the development, testing, and production environments). As such, profiles can easily lead to differing build results from different members of your team. However, used properly, profiles can be used while still preserving project portability.

Find about maven build life cycle and phases in the upper of the article!

Maven Goals

- Each phase is a sequence of goals , and each goal is responsible for a specific tasks.

- When we run a phase – all goals bound to this phase are executed in order.

- Here are some of the phases and default goals bound to them

Compiler

- The compile goal from the compiler plugin is bound to the compile phase.

Surefire

- Test is bound to test phase.

Install

- Install is bound to install phase.

Jar and War

- War is bound to package phase.

Dependency Management in Maven

- One of the core features of Maven is Dependency Management.

- Managing dependencies is a difficult task once we've to deal with multi-module projects (consisting of hundreds of modules/sub-projects).

- Maven provides a high degree of control to manage such scenarios.

Transitive Dependency Discovery

- It is pretty often a case, when a library, say A, depends upon other library, say B.

- In case another project C wants to use A, then that project requires to use library B too.

- Maven helps to avoid such requirements to discover all the libraries required.

- Maven does so by reading project files (pom.xml) of dependencies, figure out their dependencies and so on.

- We only need to define direct dependency in each project pom.

- Maven handles the rest automatically.

- With transitive dependencies, the graph of included libraries can quickly grow to a large extent.

- Cases can arise when there are duplicate libraries.

- Maven provides few features to control extent of transitive dependencies.

Dependency Mediation

- Determines what version of a dependency is to be used when multiple versions of an artifact are encountered.

- If two dependency versions are at the same depth in the dependency tree, the first declared dependency will be used.

Dependency Management

- Directly specify the versions of artifacts to be used when they are encountered in transitive dependencies.

- For an example project C can include B as a dependency in its dependency Management section and directly control which version of B is to be used when it is ever referenced.

Dependency Scope

- Includes dependencies as per the current stage of the build.

Excluded Dependencies

- Any transitive dependency can be excluded using "exclusion" element.

- As example, A depends upon B and B depends upon C, then A can mark C as excluded.

Optional Dependencies

- Any transitive dependency can be marked as optional using "optional" element.

- As example, A depends upon B and B depends upon C. Now B marked C as optional. Then A will not use C.

Dependency Scope

- Transitive Dependencies Discovery can be restricted using various Dependency Scope as mentioned below.

Compile

- This scope indicates that dependency is available in classpath of project.

- It is default scope.

Provided

- This scope indicates that dependency is to be provided by JDK or web-Server/Container at runtime.

Runtime

- This scope indicates that dependency is not required for compilation, but is required during execution.

Test

- This scope indicates that the dependency is only available for the test compilation and execution phases.

System

- This scope indicates that you have to provide the system path.

Import

- This scope is only used when dependency is of type pom.

- This scope indicates that the specified POM should be replaced with the dependencies in that POM's <dependencyManagement> section.

- Usually, we have a set of project under a common project. In such case, we can create a common pom having all the common dependencies and then make this pom, the parent of sub-project's poms. Following example will help you understand this concept.

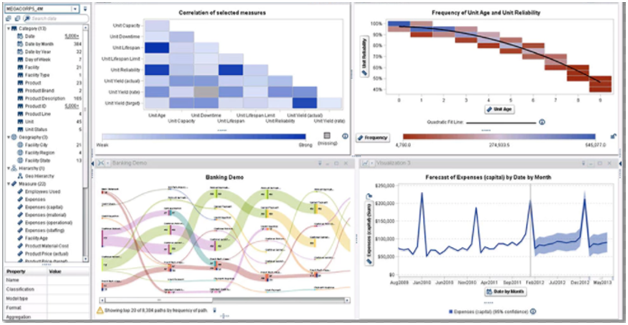

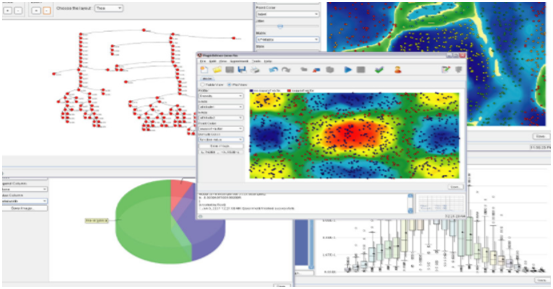

Contemporary Tools and Practices Widely Used in the Software Industry

- The growing demand and importance of data analytics in the market have generated many openings worldwide.

- It becomes slightly tough to shortlist the top data analytics tools as the open source tools are more popular, user-friendly and performance oriented than the paid version.

- There are many open source tools which doesn’t require much/any coding and manages to deliver better results than paid versions e.g. – R programming in data mining and Tableau public, Python in data visualization. Below is the list of top 10 of data analytics tools, both open source and paid version, based on their popularity, learning and performance.

R Programming

- R is the leading analytics tool in the industry and widely used for statistics and data modeling.

- It can easily manipulate your data and present in different ways.

- It has exceeded SAS in many ways like capacity of data, performance and outcome.

- R compiles and runs on a wide variety of platforms viz -UNIX, Windows and MacOS.

- It has 11,556 packages and allows you to browse the packages by categories.

- R also provides tools to automatically install all packages as per user requirement, which can also be well assembled with Big data.

Tableau Public

- Tableau Public is a free software that connects any data source be it corporate Data Warehouse, Microsoft Excel or web-based data, and creates data visualizations, maps, dashboards etc. with real-time updates presenting on web.

- They can also be shared through social media or with the client.

- It allows the access to download the file in different formats. If you want to see the power of tableau, then we must have very good data source.

- Tableau’s Big Data capabilities makes them important and one can analyze and visualize data better than any other data visualization software in the market.

Python

- Python is an object-oriented scripting language which is easy to read, write, maintain and is a free open source tool.

- It was developed by Guido van Rossum in late 1980’s which supports both functional and structured programming methods.

- Phython is easy to learn as it is very similar to JavaScript, Ruby, and PHP.

- Also, Python has very good machine learning libraries viz. Scikitlearn, Theano, Tensorflow and Keras.

- Another important feature of Python is that it can be assembled on any platform like SQL server, a MongoDB database or JSON.

- Python can also handle text data very well.

SAS

- Sas is a programming environment and language for data manipulation and a leader in analytics, developed by the SAS Institute in 1966 and further developed in 1980’s and 1990’s.

- SAS is easily accessible, managable and can analyze data from any sources.

- SAS introduced a large set of products in 2011 for customer intelligence and numerous SAS modules for web, social media and marketing analytics that is widely used for profiling customers and prospects.

- It can also predict their behaviors, manage, and optimize communications.

Apache Spark

- The University of California, Berkeley’s AMP Lab, developed Apache in 2009.

- Apache Spark is a fast large-scale data processing engine and executes applications in Hadoop clusters 100 times faster in memory and 10 times faster on disk.

- Spark is built on data science and its concept makes data science effortless.

- Spark is also popular for data pipelines and machine learning models development.

- Spark also includes a library – MLlib, that provides a progressive set of machine algorithms for repetitive data science techniques like Classification, Regression, Collaborative Filtering, Clustering, etc.

Rapidminer

- RapidMiner is a powerful integrated data science platform developed by the same company that performs predictive analysis and other advanced analytics like data mining, text analytics, machine learning and visual analytics without any programming.

- RapidMiner can incorporate with any data source types, including Access, Excel, Microsoft SQL, Tera data, Oracle, Sybase, IBM DB2, Ingres, MySQL, IBM SPSS, Dbase etc.

- The tool is very powerful that can generate analytics based on real-life data transformation settings, i.e. you can control the formats and data sets for predictive analysis.

Qlikview

- QlikView has many unique features like patented technology and has in-memory data processing, which executes the result very fast to the end users and stores the data in the report itself.

- Data association in QlikView is automatically maintained and can be compressed to almost 10% from its original size.

- Data relationship is visualized using colors – a specific color is given to related data and another color for non-related data.

Splunk

- Splunk is a tool that analyzes and search the machine-generated data.

- Splunk pulls all text-based log data and provides a simple way to search through it, a user can pull in all kind of data, and perform all sort of interesting statistical analysis on it, and present it in different formats.